Perhaps the most important part of the survey process is the creation of questions that accurately measure the opinions, experiences and behaviors of the public. Accurate random sampling will be wasted if the information gathered is built on a shaky foundation of ambiguous or biased questions. Creating good measures involves both writing good questions and organizing them to form the questionnaire.

Questionnaire design is a multistage process that requires attention to many details at once. Designing the questionnaire is complicated because surveys can ask about topics in varying degrees of detail, questions can be asked in different ways, and questions asked earlier in a survey may influence how people respond to later questions. Researchers are also often interested in measuring change over time and therefore must be attentive to how opinions or behaviors have been measured in prior surveys.

Surveyors may conduct pilot tests or focus groups in the early stages of questionnaire development in order to better understand how people think about an issue or comprehend a question. Pretesting a survey is an essential step in the questionnaire design process to evaluate how people respond to the overall questionnaire and specific questions, especially when questions are being introduced for the first time.

For many years, surveyors approached questionnaire design as an art, but substantial research over the past forty years has demonstrated that there is a lot of science involved in crafting a good survey questionnaire. Here, we discuss the pitfalls and best practices of designing questionnaires.

Question development

There are several steps involved in developing a survey questionnaire. The first is identifying what topics will be covered in the survey. For Pew Research Center surveys, this involves thinking about what is happening in our nation and the world and what will be relevant to the public, policymakers and the media. We also track opinion on a variety of issues over time, so we often ensure that we update these trends on a regular basis to better understand whether people's opinions are changing.

At Pew Research Center, questionnaire development is a collaborative and iterative process where staff meet to discuss drafts of the questionnaire several times over the course of its development. We frequently test new survey questions ahead of time through qualitative research methods such as focus groups, cognitive interviews, pretesting (often using an online, opt-in sample), or a combination of these approaches. Researchers use insights from this testing to refine questions before they are asked in a production survey, such as on the American Trends Panel (ATP).

Measuring change over time

Many surveyors want to track changes over time in people's attitudes, opinions and behaviors. To measure change, questions are asked at two or more points in time. A cross-sectional design surveys different people in the same population at multiple points in time. A panel, such as the ATP, surveys the same people over time. However, it is common for the set of people in survey panels to change over time as new panelists are added and some prior panelists drop out. Many of the questions in Pew Research Center surveys have been asked in prior polls. Asking the same questions at different points in time allows us to report on changes in the overall views of the general public (or a subset of the public, such as registered voters, men or Black Americans), or what we call "trending the data".

When measuring change over time, it is important to use the same question wording and to be sensitive to where the question is asked in the questionnaire to maintain a similar context as when the question was asked previously (see question wording and question order for further information). All of our survey reports include a topline questionnaire that provides the exact question wording and sequencing, along with results from the current survey and previous surveys in which we asked the question.

The Center's transition from conducting U.S. surveys by live telephone interviewing to an online panel (around 2014 to 2020) complicated some opinion trends, but not others. Opinion trends that ask about sensitive topics (e.g., personal finances or attending religious services) or that elicited volunteered answers (e.g., "neither" or "don't know") over the phone tended to show larger differences than other trends when shifting from phone polls to the online ATP. The Center adopted several strategies for coping with changes to data trends that may be related to this change in methodology. If there is evidence suggesting that a change in a trend stems from switching from phone to online measurement, Center reports flag that possibility for readers to try to head off confusion or erroneous conclusions.

Open- and closed-ended questions

One of the most significant decisions that can affect how people answer questions is whether the question is posed as an open-ended question, where respondents provide a response in their own words, or a closed-ended question, where they are asked to choose from a list of answer choices.

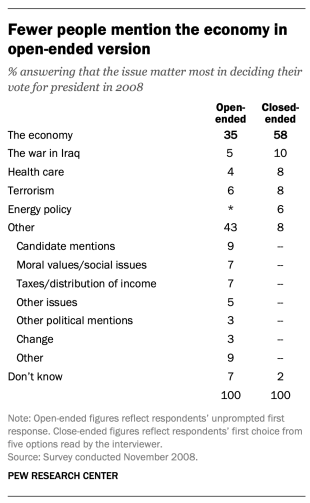

For example, in a poll conducted after the 2008 presidential election, people responded very differently to two versions of the question: "What one issue mattered most to you in deciding how you voted for president?" One was closed-ended and the other open-ended. In the closed-ended version, respondents were provided five options and could volunteer an option not on the list.

When explicitly offered the economy as a response, more than half of respondents (58%) chose this answer; only 35% of those who responded to the open-ended version volunteered the economy. Moreover, among those asked the closed-ended version, fewer than one-in-ten (8%) provided a response other than the five they were read. By contrast, fully 43% of those asked the open-ended version provided a response not listed in the closed-ended version of the question. All of the other issues were chosen at least slightly more often when explicitly offered in the closed-ended version than in the open-ended version. (Also see "High Marks for the Campaign, a High Bar for Obama" for more information.)

Researchers will sometimes conduct a pilot study using open-ended questions to discover which answers are most common. They will then develop closed-ended questions based on that pilot study that include the most common responses as answer choices. In this way, the questions may better reflect what the public is thinking or how they view a particular issue, or bring to light certain issues that the researchers may not have been aware of.

When asking closed-ended questions, the choice of options provided, how each option is described, the number of response options offered, and the order in which options are read can all influence how people respond. One example of the impact of how categories are defined can be found in a Pew Research Center poll conducted in January 2002. When half of the sample was asked whether it was "more important for President Bush to focus on domestic policy or foreign policy," 52% chose domestic policy while only 34% said foreign policy. When the category "foreign policy" was narrowed to a specific aspect, "the war on terrorism," far more people chose it; only 33% chose domestic policy while 52% chose the war on terrorism.

In most circumstances, the number of answer choices should be kept relatively small (just four or perhaps five at most), especially in telephone surveys. Psychological research indicates that people have a hard time keeping more than this number of choices in mind at one time. When the question is asking about an objective fact and/or demographics, such as the religious affiliation of the respondent, more categories can be used. In fact, they are encouraged to ensure inclusivity. For example, Pew Research Center's standard religion questions include more than 12 different categories, beginning with the most common affiliations (Protestant and Catholic). Most respondents have no trouble with this question because they can expect to see their religious group within that list in a self-administered survey.

In addition to the number and choice of response options offered, the order of answer categories can influence how people respond to closed-ended questions. Research suggests that in telephone surveys respondents more frequently choose items heard later in a list (a "recency effect"), and in self-administered surveys, they tend to choose items at the top of the list (a "primacy effect").

Because of concerns about the effects of category order on responses to closed-ended questions, many sets of response options in Pew Research Center's surveys are programmed to be randomized to ensure that the options are not asked in the same order for each respondent. Rotating or randomizing means that questions or items in a list are not asked in the same order to each respondent. Answers to questions are sometimes affected by questions that precede them. By presenting questions in a different order to each respondent, we ensure that each question gets asked in the same context as every other question the same number of times (e.g., first, last or any position in between). This does not eliminate the potential impact of previous questions on the current question, but it does ensure that this bias is spread randomly across all of the questions or items in the list. For instance, in the example discussed above about what issue mattered most in people's vote, the order of the five issues in the closed-ended version of the question was randomized so that no one issue appeared early or late in the list for all respondents. Randomization of response items does not eliminate order effects, but it does ensure that this type of bias is spread randomly.
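
As an illustration of the randomization described above, here is a minimal Python sketch. It is not the Center's survey software: the function names, the seed and the five placeholder issue labels are all assumptions made for the example. Each respondent gets an independently shuffled list, and a quick tally shows that every option ends up in the first position about equally often.

```python
import random
from collections import Counter

# Hypothetical placeholder labels; the actual five issues are not listed in the text.
OPTIONS = ["Issue A", "Issue B", "Issue C", "Issue D", "Issue E"]


def randomized_options(options, respondent_id, seed=2008):
    """Return the options in a random order that is reproducible per respondent."""
    # Seeding with the respondent ID means the same respondent always sees
    # the same order, while different respondents see different orders.
    rng = random.Random(f"{seed}-{respondent_id}")
    shuffled = list(options)  # copy, so the master list is never mutated
    rng.shuffle(shuffled)
    return shuffled


def first_position_counts(options, n_respondents):
    """Count how often each option is shown first across many respondents."""
    counts = Counter()
    for respondent_id in range(n_respondents):
        counts[randomized_options(options, respondent_id)[0]] += 1
    return counts


if __name__ == "__main__":
    for option, count in sorted(first_position_counts(OPTIONS, 5000).items()):
        print(f"{option}: shown first {count} times")
```

With enough respondents, each option should lead the list roughly one-fifth of the time, which is the sense in which any order effect is spread evenly rather than removed.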

Questions with ordinal response categories, meaning those with an underlying order (e.g., excellent, good, only fair, poor OR very favorable, mostly favorable, mostly unfavorable, very unfavorable), are generally not randomized because the order of the categories conveys important information to help respondents answer the question. Generally, these types of scales should be presented in order so respondents can easily place their responses along the continuum, but the order can be reversed for some respondents. For example, in one of Pew Research Center's questions about abortion, half of the sample is asked whether abortion should be "legal in all cases, legal in most cases, illegal in most cases, illegal in all cases," while the other half of the sample is asked the same question with the response categories read in reverse order, starting with "illegal in all cases." Again, reversing the order does not eliminate the recency effect but distributes it randomly across the population.
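
The difference from full randomization can be sketched in a few lines of Python. This is only an illustration under assumed names (`scale_for_respondent` and the seed are hypothetical): an ordinal scale is never shuffled, only shown forward or reversed, with the direction chosen at random for each respondent.

```python
import random

ABORTION_SCALE = [
    "Legal in all cases",
    "Legal in most cases",
    "Illegal in most cases",
    "Illegal in all cases",
]


def scale_for_respondent(scale, respondent_id, seed=43):
    """Return the scale in forward or reverse order, chosen at random per respondent."""
    rng = random.Random(f"{seed}-{respondent_id}")
    if rng.random() < 0.5:
        return list(scale)        # forward: starts with "Legal in all cases"
    return list(reversed(scale))  # reversed: starts with "Illegal in all cases"


if __name__ == "__main__":
    for respondent_id in range(4):
        print(respondent_id, scale_for_respondent(ABORTION_SCALE, respondent_id))
```

Because only two orders are possible, the scale always reads as a continuum, yet roughly half of respondents hear each end of it last.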

Question wording

The choice of words and phrases in a question is critical in expressing the meaning and intent of the question to the respondent and ensuring that all respondents interpret the question the same way. Even small wording differences can substantially affect the answers people provide.


An example of a wording difference that had a significant impact on responses comes from a January 2003 Pew Research Center survey. When people were asked whether they would "favor or oppose taking military action in Iraq to end Saddam Hussein's rule," 68% said they favored military action while 25% said they opposed military action. However, when asked whether they would "favor or oppose taking military action in Iraq to end Saddam Hussein's rule even if it meant that U.S. forces might suffer thousands of casualties," responses were dramatically different; only 43% said they favored military action, while 48% said they opposed it. The introduction of U.S. casualties altered the context of the question and influenced whether people favored or opposed military action in Iraq.

There has been a substantial amount of research to gauge the impact of different ways of asking questions and how to minimize differences in the way respondents interpret what is being asked. The issues related to question wording are more numerous than can be treated adequately in this short space, but below are a few of the important things to consider:

First, it is important to ask questions that are clear and specific and that each respondent will be able to answer. If a question is open-ended, it should be evident to respondents that they can answer in their own words and what type of response they should provide (an issue or problem, a month, number of days, etc.). Closed-ended questions should include all reasonable responses (i.e., the list of options is exhaustive) and the response categories should not overlap (i.e., response options should be mutually exclusive). Further, it is important to discern when it is best to use forced-choice closed-ended questions (often denoted with a radio button in online surveys) versus "select-all-that-apply" lists (or check-all boxes). A 2019 Center study found that forced-choice questions tend to yield more accurate responses, especially for sensitive questions. Based on that research, the Center generally avoids using select-all-that-apply questions.
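
For numeric answer brackets, the "exhaustive" and "mutually exclusive" requirements can be checked mechanically. The sketch below is a hypothetical helper, not something taken from the Center's tooling, and the age brackets are invented for illustration. It walks through inclusive integer ranges and reports any gaps or overlaps.

```python
def check_brackets(brackets, lowest, highest):
    """Report gaps and overlaps in a list of (label, low, high) inclusive ranges.

    An empty result means the brackets are exhaustive over lowest..highest
    and mutually exclusive.
    """
    problems = []
    next_uncovered = lowest  # smallest value not yet covered by any bracket
    for label, low, high in sorted(brackets, key=lambda b: b[1]):
        if low > next_uncovered:
            problems.append(f"gap: no option covers {next_uncovered}-{low - 1}")
        elif low < next_uncovered:
            problems.append(f"overlap: '{label}' starts at {low}, which is already covered")
        next_uncovered = max(next_uncovered, high + 1)
    if next_uncovered <= highest:
        problems.append(f"gap: no option covers {next_uncovered}-{highest}")
    return problems


if __name__ == "__main__":
    # Hypothetical age brackets for adults aged 18 to 120.
    good = [("18-29", 18, 29), ("30-49", 30, 49), ("50-64", 50, 64), ("65+", 65, 120)]
    bad = [("18-30", 18, 30), ("30-49", 30, 49), ("55+", 55, 120)]
    print(check_brackets(good, 18, 120))
    print(check_brackets(bad, 18, 120))
```

In the second list a 30-year-old fits two options and a 52-year-old fits none, which are exactly the two problems the helper reports. Non-numeric categories still need a human review for the same two properties.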

It is also important to ask only one question at a time. Questions that ask respondents to evaluate more than one concept (known as double-barreled questions), such as "How much confidence do you have in President Obama to handle domestic and foreign policy?", are difficult for respondents to answer and often lead to responses that are difficult to interpret. In this example, it would be more effective to ask two separate questions, one about domestic policy and another about foreign policy.

In general, questions that use simple and concrete language are more easily understood by respondents. It is especially important to consider the education level of the survey population when thinking about how easy it will be for respondents to interpret and answer a question. Double negatives (e.g., do you favor or oppose not allowing gays and lesbians to legally marry) or unfamiliar abbreviations or jargon (e.g., ANWR instead of Arctic National Wildlife Refuge) can result in respondent confusion and should be avoided.

Similarly, it is important to consider whether certain words may be viewed as biased or potentially offensive to some respondents, as well as the emotional reaction that some words may provoke. For example, in a 2005 Pew Research Center survey, 51% of respondents said they favored "making it legal for doctors to give terminally ill patients the means to end their lives," but only 44% said they favored "making it legal for doctors to assist terminally ill patients in committing suicide." Although both versions of the question are asking about the same thing, the reaction of respondents was different. In another example, respondents have reacted differently to questions using the word "welfare" as opposed to the more generic "assistance to the poor." Several experiments have shown that there is much greater public support for expanding "assistance to the poor" than for expanding "welfare."

We often write two versions of a question and ask half of the survey sample one version of the question and the other half the second version. Thus, we say we have two forms of the questionnaire. Respondents are assigned randomly to receive either form, so we can assume that the two groups of respondents are essentially identical. On questions where two versions are used, significant differences in the answers between the two forms tell us that the difference is a result of the way we worded the two versions.
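
To make the split-form logic concrete, here is a rough Python sketch. It randomly assigns respondents to form A or form B and then compares the two forms with a standard two-proportion z-test. The function names and seed are hypothetical, and the sample size of 500 per form is an assumption chosen purely for illustration (the text does not report the sample sizes). The test also treats each form as a simple random sample, which ignores the weighting and design effects a real survey analysis would account for.

```python
import math
import random


def assign_form(respondent_id, seed=63):
    """Randomly assign a respondent to questionnaire form 'A' or 'B'."""
    rng = random.Random(f"{seed}-{respondent_id}")
    return "A" if rng.random() < 0.5 else "B"


def two_proportion_z(p_a, n_a, p_b, n_b):
    """z statistic for the difference between two independent proportions."""
    pooled = (p_a * n_a + p_b * n_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / standard_error


if __name__ == "__main__":
    forms = [assign_form(i) for i in range(1000)]
    print("Form A:", forms.count("A"), "Form B:", forms.count("B"))

    # January 2003 wording experiment: 68% favored military action on one form
    # and 43% on the other. n = 500 per form is a hypothetical sample size.
    z = two_proportion_z(0.68, 500, 0.43, 500)
    print(f"z = {z:.2f}; |z| > 1.96 suggests a difference at the 5% level")
```

Under these assumptions the 25-point gap produces a z statistic far above 1.96, consistent with attributing the difference to the wording rather than to chance differences between the two randomly assigned groups.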

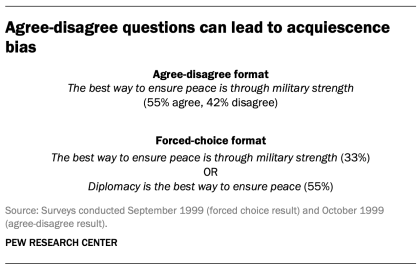

One of the most common formats used in survey questions is the "agree-disagree" format. In this type of question, respondents are asked whether they agree or disagree with a particular statement. Research has shown that, compared with the better educated and better informed, less educated and less informed respondents have a greater tendency to agree with such statements. This is sometimes called an "acquiescence bias" (since some kinds of respondents are more likely to acquiesce to the assertion than are others). This behavior is even more pronounced when there's an interviewer present, rather than when the survey is self-administered. A better practice is to offer respondents a choice between alternative statements. A Pew Research Center experiment with one of its routinely asked values questions illustrates the difference that question format can make. Not only does the forced-choice format yield a very different result overall from the agree-disagree format, but the pattern of answers between respondents with more or less formal education also tends to be very different.

One other challenge in developing questionnaires is what is called "social desirability bias." People have a natural tendency to want to be accepted and liked, and this may lead people to provide inaccurate answers to questions that deal with sensitive subjects. Research has shown that respondents understate alcohol and drug use, tax evasion and racial bias. They also may overstate church attendance, charitable contributions and the likelihood that they will vote in an election. Researchers attempt to account for this potential bias in crafting questions about these topics. For instance, when Pew Research Center surveys ask about past voting behavior, it is important to note that circumstances may have prevented the respondent from voting: "In the 2012 presidential election between Barack Obama and Mitt Romney, did things come up that kept you from voting, or did you happen to vote?" The choice of response options can also make it easier for people to be honest. For example, a question about church attendance might include three of six response options that indicate infrequent attendance. Research has also shown that social desirability bias can be greater when an interviewer is present (e.g., telephone and face-to-face surveys) than when respondents complete the survey themselves (e.g., paper and web surveys).

Lastly, because slight modifications in question wording can affect responses, identical question wording should be used when the intention is to compare results to those from earlier surveys. Similarly, because question wording and responses can vary based on the mode used to survey respondents, researchers should carefully evaluate the likely effects on trend measurements if a different survey mode will be used to assess change in opinion over time.

Question order

Once the survey questions are developed, particular attention should be paid to how they are ordered in the questionnaire. Surveyors must be attentive to how questions early in a questionnaire may have unintended effects on how respondents answer subsequent questions. Researchers have demonstrated that the order in which questions are asked can influence how people respond; earlier questions can unintentionally provide context for the questions that follow (these effects are called "order effects").

One kind of order effect can be seen in responses to open-ended questions. Pew Research Center surveys generally ask open-ended questions about national problems, opinions about leaders and similar topics near the beginning of the questionnaire. If closed-ended questions that relate to the topic are placed before the open-ended question, respondents are much more likely to mention concepts or considerations raised in those earlier questions when responding to the open-ended question.

For closed-ended opinion questions, there are two main types of order effects: contrast effects (where the order results in greater differences in responses), and assimilation effects (where responses are more similar as a result of their order).

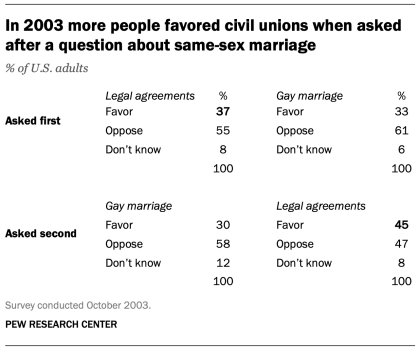

An example of a contrast effect can be seen in a Pew Research Center poll conducted in October 2003, a dozen years before same-sex marriage was legalized in the U.S. That poll found that people were more likely to favor allowing gays and lesbians to enter into legal agreements that give them the same rights as married couples when this question was asked after one about whether they favored or opposed allowing gays and lesbians to marry (45% favored legal agreements when asked after the marriage question, but 37% favored legal agreements without the immediate preceding context of a question about same-sex marriage). Responses to the question about same-sex marriage, meanwhile, were not significantly affected by its placement before or after the legal agreements question.

Another experiment embedded in a December 2008 Pew Research Center poll also resulted in a contrast effect. When people were asked "All in all, are you satisfied or dissatisfied with the way things are going in this country today?" immediately after having been asked "Do you approve or disapprove of the way President Bush is handling his job as President?", 88% said they were dissatisfied, compared with only 78% without the context of the prior question.

Responses to presidential approval remained relatively unchanged whether national satisfaction was asked before or after it. A similar finding occurred in December 2004 when both satisfaction and presidential approval were much higher (57% were dissatisfied when Bush approval was asked first vs. 51% when general satisfaction was asked first).

Several studies also have shown that asking a more specific question before a more general question (e.g., asking about happiness with one's marriage before asking about one's overall happiness) can result in a contrast effect. Although some exceptions have been found, people tend to avoid redundancy by excluding the more specific question from the general rating.

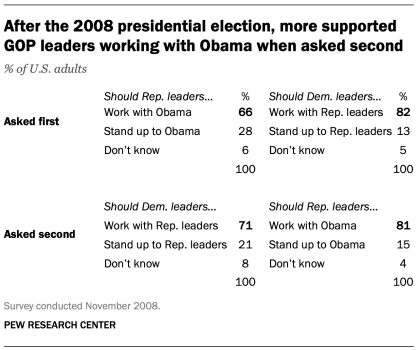

Assimilation effects occur when responses to two questions are more consistent or closer together because of their placement in the questionnaire. We found an example of an assimilation effect in a Pew Research Center poll conducted in November 2008 when we asked whether Republican leaders should work with Obama or stand up to him on important issues and whether Democratic leaders should work with Republican leaders or stand up to them on important issues. People were more likely to say that Republican leaders should work with Obama when the question was preceded by the one asking what Democratic leaders should do in working with Republican leaders (81% vs. 66%). However, when people were first asked about Republican leaders working with Obama, fewer said that Democratic leaders should work with Republican leaders (71% vs. 82%).

The order in which questions are asked is of particular importance when tracking trends over time. As a result, care should be taken to ensure that the context is similar each time a question is asked. Modifying the context of the question could cast doubt on any observed changes over time (see measuring change over time for more information).

A questionnaire, like a conversation, should be grouped by topic and unfold in a logical order. It is often helpful to begin the survey with simple questions that respondents will find interesting and engaging. Throughout the survey, an effort should be made to keep the survey interesting and not overburden respondents with several difficult questions right after one another. Demographic questions such as income, education or age should not be asked near the beginning of a survey unless they are needed to determine eligibility for the survey or for routing respondents through particular sections of the questionnaire. Even then, it is best to precede such items with more interesting and engaging questions. One virtue of survey panels like the ATP is that demographic questions usually only need to be asked once a year, not in each survey.


Source: https://www.pewresearch.org/our-methods/u-s-surveys/writing-survey-questions/
